
My thoughts on AI

As the year 2025 came to an end, I wanted to write down my current thoughts on AI and the world in general. For some years now I have been observing the industry and reflecting on the benefits and risks of the technology, trying to form an opinion of my own. I still haven't made up my mind, and things change continuously; I may realize that all my reflections were wrong. But I think it is worth making an effort to summarize my hopes and fears, and my reading of what is happening in the world, as a checkpoint of sorts. Don't expect any bold claims or visionary CEO-like statements, as I am not that type of person.

The tech nerds did it again. They revolutionized the way we work through a technology that affects all areas of life far beyond the tech world, making AI a topic of discussion for the general public. AI is no longer a theoretical idea or a piece of science fiction: it has already been integrated into many areas of our work and personal lives, with the younger generation being affected the most.

It still fascinates me how humanity keeps inventing and innovating. Is this something profoundly coded in human nature?

Market (and geopolitics)

Over the last few years the market has shown that competition is strong, as companies invest and adapt quickly to the new status quo, with remarkable speed and force, I have to say. This is part of the Silicon Valley culture that made these companies so successful and central to the US and global economy. The tech market clearly went all-in on Altman's vision, showing elasticity and a willingness to restructure and take risks. Governments and institutions such as the US and the EU now recognize the technology as a matter of national interest and are heavily invested in it. New players like Anthropic, along with several Chinese companies (especially with open-source models), have established strong positions in the market, a sign of a healthy economy, while the usual big companies keep their central position and influence (as expected).

China is advancing rapidly and has shown that it can compete with American companies on software and services. I expect this to continue at a fast pace. All eyes are on the US and China right now, and Trump is right to worry about it. China's manufacturing power, its rich resources, its high capacity to produce electricity, and the Communist Party's control over the population and its narrative are making China incredibly competitive, while Europe continues to lag behind in every respect. Still, the US leads the way in developing and delivering the new technology to customers. More broadly, the US has shown that it is willing to go to great lengths to protect its interests.

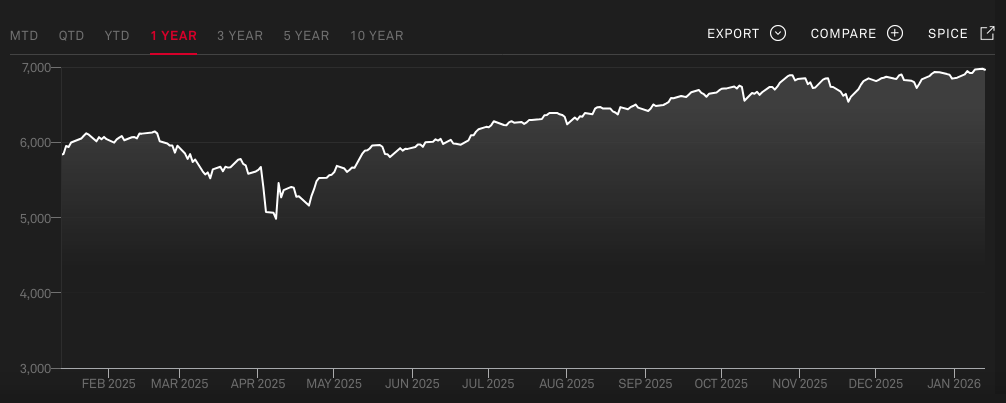

I don't know if at the end of the Trump administration USA will be in a better or worse position. His focus on protectionism and national-interests may be a great opportunity or a failure and cause of global uncertainty. While there was a slight fall in the SP500 due to rapidly changing tariffs, the index quickly recovered and is going as strong as always. TSMC is building major semiconductor factories in USA. Tech is going strong but it is highly exposed to AI investments; there is a rising concern that the market is growing a bubble that may explode sooner or later, which would damage the American economy, but this is just speculation.

Figure 1: SPX during Trump's 1st year

Europe has been left out of the international picture and is mocked by all players. The EU continues to decline and shrink. Its failure to innovate and compete, years of damaging policies such as the Green Deal, the shutdown of nuclear power plants and the car industry crisis in Germany, together with sanctions on Russia and an aging population, have made the Union's economy unproductive. Europe's lack of investment in its military and its reliance on American technology and military protection have made it weak. In a world where international law is but a piece of paper, Europe is unarmed and surrounded by dangerous wolves.

I think there are two different ways to analyze artificial intelligence: one is purely technological, the other concerns its social impact. As a programmer and a technical person, I am more inclined to spend my mental energy on the technical side, as I feel more comfortable with it. Still, it is important to reflect on how society will evolve too.

As a technology

AI was made possible by the intersection of ground-breaking research, the availability of compute, and the willingness to throw huge amounts of capital into the technology. Silicon Valley has always excelled at these things. It would not be possible to run all these LLMs at scale without big datacenters run by massive cloud providers around the globe, and without the advancements in semiconductor manufacturing and datacenter hardware. Power production limits scalability, but this can be solved by throwing more money at it and with government policies that are favorable to companies.

AI is a new way of learning. It is now easier than ever to get answers to complex questions. Instead of desperately searching old online forums, or trying to find extracts from books that may answer our question, we get a quick, well-explained answer from the AI, with the opportunity to ask more questions and go deeper (sometimes with references). Retrieval-augmented generation (RAG) makes it possible to consult large collections of documents, such as software documentation. Obviously we shouldn't be naive and take everything the AI generates as truth, just as we shouldn't with anything we read on the internet. As long as we keep this in mind and remain critical thinkers, AI is a useful tool for our curiosity and imagination.

AI is a new way of working, especially for software developers. AI agents add a new layer to the already complex landscape of software. If you work in web backend or infrastructure, you have to understand and keep up with the technology. For all other developers and creators, AI is a helpful tool that enhances our ability to create. It helps companies and individuals be more productive, cutting costs and time if used wisely and effectively.

Multimodal AIs keep getting better and better. Voice conversations are smooth, and image generation is good enough that it is now used in commercials, advertisements and magazines. What made me realize how widely this technology has been adopted is that the primary users of these generators are regular people, not only corporations.

While walking in my home town, I noticed a few AI-generated posters for local events and meet-ups, things like chess courses or small concerts. In my family everybody uses AI, even those who are not tech people. Similarly, at my university AI has reached universal adoption. Indeed, anybody with a consumer device and an internet connection can use these services, which explains their huge growth. Never have I seen a technology integrated so fast; the only comparable example I can think of is Zoom during COVID.

With open-source models it is now possible to develop models and agents entirely locally, and the rise of small models and AI-optimized hardware makes it possible to run them on commodity hardware. In general, consumer electronics in 2025 are powerful enough to run decent models, a triumph of technology. For me, this is just another system to tinker with like any other; not much has changed.

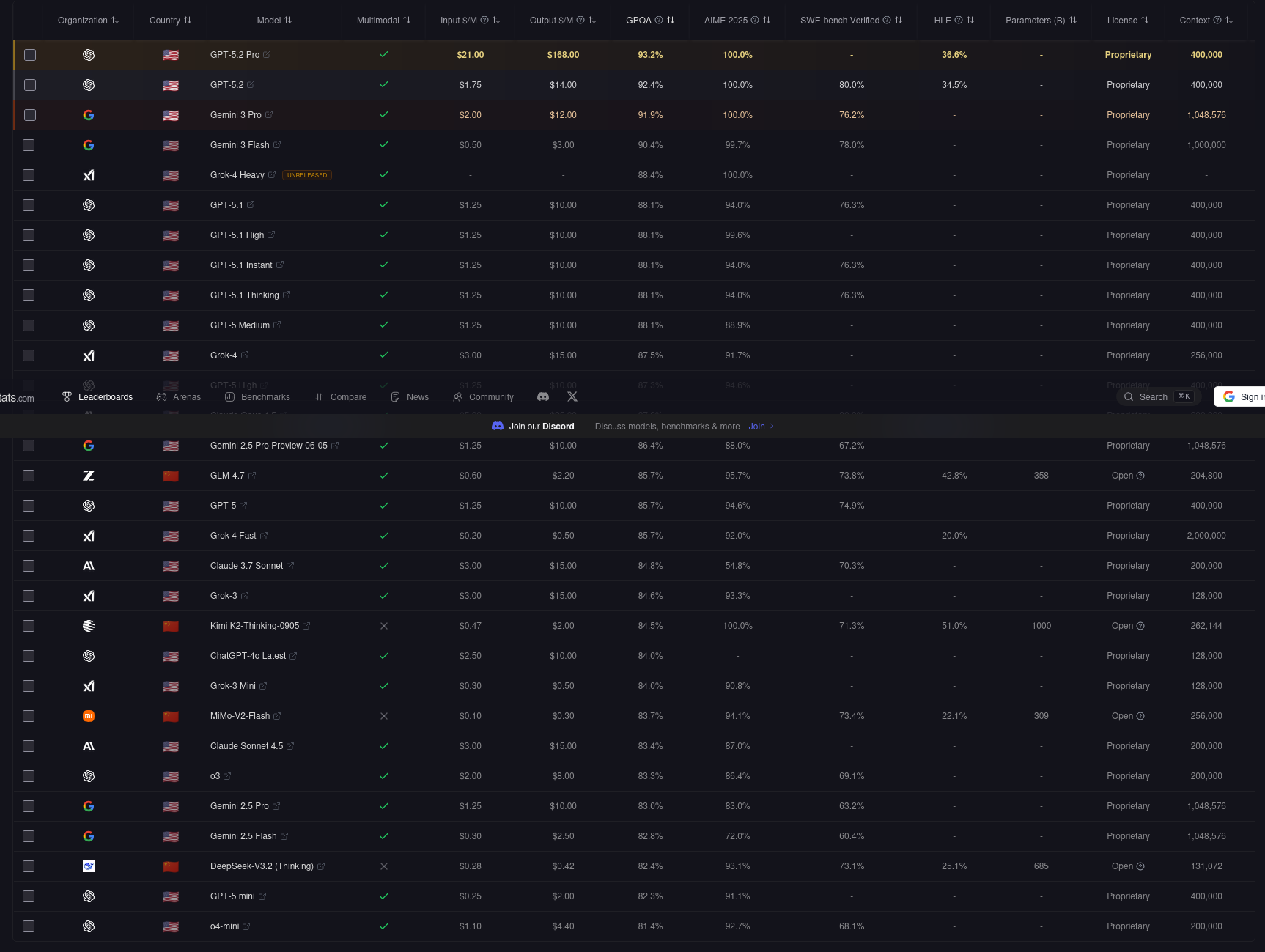

Figure 2: LLM leaderboard as of 14-01-2026

The most popular AI assistants in 2025 were ChatGPT, Gemini and Claude.

Impact on Society

While I am enthusiastic about the technological side, when it comes to its impact on society I am deeply concerned and pessimistic at best.

We are now seeing many people, especially programmers, worried about being replaced by AI. I think the reality is that managers will keep firing everybody they can, and there will be fewer opportunities for junior positions. I think a shift in mindset among many (even greatly talented) programmers is inevitable: embrace the new way of developing software. I don't see much of a difference from the direction the industry has been going for a long time. Programming in big corporations was already stripped of any creativity and joy, and the state of web development shows that good software is nothing but an old dream of the past, and that knowing some JavaScript framework is valued more than deep technical knowledge. I see a similar situation in the cloud community and in how the industry talks about AI.

I deeply love writing code and figuring things out on my own, and I will keep doing this in my own projects, but we need to accept that coding as a job is mostly automated by AI, and that companies only care about development speed and cutting costs to increase margins. If an AI ever becomes good enough to write something as complex as the Linux kernel on its own, these companies will fire every single programmer left. This causes a lot of frustration and pain for people who are passionate about code and the old way of developing software, and who made their passion part of their identity. I count myself among them. I think the same is true for artists and other creators: these activities are largely automated in large businesses, so you may struggle to find a job. Still, the human drive to create will never die, and people will keep painting and composing music, just as people still play chess even though AI plays it far better than any human. Yet I cannot see this as a positive change, even if it is completely rational from the company's point of view.

A greater issue is that people are relying on AI more and more, even for basic tasks. I see this in my younger brothers: AI is overused everywhere. The human mind is lazy, and it is easier to get dopamine from entertainment than to learn hard things. This is why short-form content is so popular, and I think it is very negative overall ("brain rot"). In these circumstances, depending on AI from a young age is extremely dangerous.

Will we really be able to deeply and intimately understand and reason about things by using AI? For an experienced person the answer is probably yes, and they will make great use of AI to write things that would have taken a long time to do manually. But for the student, for the junior, I think raw tinkering and the experience of actually DOING the thing are incredibly valuable. This is what I have always thought, and this is how I learn things. My question is the following: when do we stop learning? If the best way to learn is to do things yourself, then at what point do we stop doing things ourselves and let an AI do the job for us instead? I want to keep learning.

The decline of critical thinking is a bigger problem that began well before AI was a thing. We don't practice critical thinking or rationally evaluate our ideas; instead, we are increasingly distracted by entertainment and polarized by social media. Public schools don't teach us how to think; in Italy, for example, schooling is based on memorization and frequent rote tests. AI just makes things worse.

No wonder therapy is so popular, even among people without mental illness.

Additionally, the content generated by AI is of really low quality. Still, we are flooded with articles, blogs and news written completely and effortlessly by AI. As I said, the human mind is lazy, and if it can find a way to avoid putting effort into something, it will. This leads to the phenomenon of "slop" content we see everywhere. Most of the internet and social media is unreadable and unwatchable, and I think it is deeply unhealthy to spend time online. This is really sad, but it is the reality and we have to live with it. My main concern is for the younger generations, who may find it difficult not to use AI systems.

I see the overall quality of everything getting lower and lower; good things are rare outliers, difficult to find. I don't think this is a good direction.

Still, my concerns are about how AI will be used. If you are smart and educated, AIs are really great tools. For this reason I think that, overall, this is a great technology that is worth developing and improving. It all comes down to the user, much like the internet or any other technology.

The greater issue for our democracies is extreme political polarization. I see this often at my university, where people are violently and intensely concerned about some problems (most of them aligned with left or extreme-left ideas) but completely blind and hostile to other opinions and world views. This is dangerous, and it can only get worse as social media promote the most extreme opinions for more clicks.

What is a programmer - What it means to be human

I have always valued deep technical knowledge, critical thinking and a balanced perspective. What I see around me is the complete opposite. AI may be able to do many things, but it feels empty and shallow. I think being human means feeling things: making things we are proud of, or any other emotion really. The programmer, as a job, will shift toward understanding software rather than writing it. Understanding will still be valued, and your abilities will be augmented by the technology.

I will not go into existentialist philosophy or talk of civilizational collapse in the age of AGI, as it serves no purpose other than to distort reality or shape a narrative. It is much more useful to look at what we have, understand it, and realistically consider where we will be in a few years.
